In the days of analog photography and cinematography, people chose lenses for their artistic and technical expression and did not worry about crop and its consequences. Today it seems to be an insurmountable and, above all, dramatic problem.

Let's bring some clarity to the various practical and technical points.

In photography, film set a standard for amateurs (in compacts) and semi-professionals: the 24×36 mm format, with a 2:3 aspect ratio. Top professionals used 6×9 backs, or sheet film as large as 60×90 mm.

In still cameras, the film runs horizontally.

At the cinema, the classic film was divided between:

- 16mm, with a 10.26 × 7.49 mm frame

- Super 16mm, with a 12.52 × 7.41 mm frame, obtained by giving up one of the two rows of perforations

- 35mm in the Academy format, 22 × 16 mm

- 35mm in the 1.85:1 widescreen format, 21.98 × 18.6 mm

- Super 35mm, 24.89 × 18.66 mm (which uses the full width of the film right up to the perforations)

In motion-picture cameras, the film runs vertically.

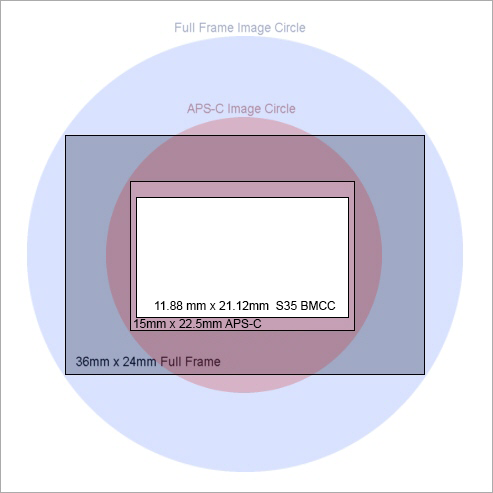

As you can see, 35mm still film and 35mm motion-picture film share only their width. Because one runs perpendicular to the other, their frames are not directly comparable at all.
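As a quick illustration of how different these frames are, here is a minimal Python sketch. It takes a selection of the dimensions quoted above and computes the aspect ratio and area of each frame. It also shows what share of the 24×36 still frame each one covers. The dictionary and function names are invented for this example.

```python
# A selection of frame sizes quoted above, as (width, height) in millimetres.
# In still cameras the 36 mm side runs along the film; in motion-picture
# cameras the frame's width runs across the film.
FRAMES = {
    "24x36 still": (36.0, 24.0),
    "16mm": (10.26, 7.49),
    "Super 16": (12.52, 7.41),
    "35mm Academy": (22.0, 16.0),
    "Super 35": (24.89, 18.66),
}


def aspect_ratio(width_mm: float, height_mm: float) -> float:
    """Width divided by height (1.5 for a 2:3 frame)."""
    return width_mm / height_mm


def area_mm2(width_mm: float, height_mm: float) -> float:
    """Frame area in square millimetres."""
    return width_mm * height_mm


if __name__ == "__main__":
    still_area = area_mm2(*FRAMES["24x36 still"])
    for name, (w, h) in FRAMES.items():
        share = area_mm2(w, h) / still_area
        print(f"{name:14s} {aspect_ratio(w, h):5.2f}:1  "
              f"{area_mm2(w, h):6.1f} mm2  {share:4.0%} of 24x36")
```

Even Super 35, the widest of the motion-picture frames listed, covers only about half the area of a 24×36 still frame.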

Digital photography has since introduced several new formats, to save on both lenses and sensors.

- 24 × 36 mm, the old standard format, has been renamed Full Frame

- 15.7 × 23.6 mm is the APS-C format, introduced for the cheaper ranges. Its exact size varies by a few millimetres between manufacturers; Canon, for example, reduces it to 14.8 × 22.2 mm

- 13.5 × 18 mm is the Micro Four Thirds format, created by the consortium of Olympus, Panasonic and Zeiss

This means that the part of the focal plane that records the image projected by a full-frame lens (24 × 36 mm) is smaller. This brings a number of aesthetic and practical changes:

- the image projected by the lens is larger than the sensor, so the sensor captures only a portion of it and the angle of view is narrower.

- less of the light gathered by the lens is captured, so the lens ends up being used wide open (at its largest aperture), where it cannot perform at its best.

- to recover a wider angle, people often use more extreme wide-angle lenses where, narratively, they should not be used, so the perspective is exaggerated.
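The narrower angle of view can be put into numbers with the standard formula for a rectilinear lens focused at infinity: angle = 2·atan(d / 2f). Here d is the sensor diagonal and f is the focal length. The following is a minimal Python sketch using the sensor sizes listed above; the names are illustrative only.

```python
import math

# Sensor diagonals in mm, computed from the sizes listed above.
SENSORS = {
    "Full Frame 24x36": math.hypot(36.0, 24.0),
    "APS-C 15.7x23.6": math.hypot(23.6, 15.7),
    "Four Thirds 13.5x18": math.hypot(18.0, 13.5),
}


def diagonal_angle_of_view(focal_mm: float, diagonal_mm: float) -> float:
    """Diagonal angle of view in degrees for a rectilinear lens focused
    at infinity (thin-lens approximation)."""
    return math.degrees(2 * math.atan(diagonal_mm / (2 * focal_mm)))


if __name__ == "__main__":
    focal = 25.0  # the same lens on every body: its focal length never changes
    for name, diag in SENSORS.items():
        angle = diagonal_angle_of_view(focal, diag)
        print(f"{focal:.0f}mm on {name}: {angle:.1f} deg")
```

The focal length passed in is the same number every time; only the diagonal, that is, the portion of the image that is captured, changes the result.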

Why and when does the crop problem arise?

Because the manufacturers who developed stills and video cameras (certainly for economic reasons) preferred to keep a single full-frame mount. They then fitted the same lenses in front of both full-frame and smaller sensors, and so people started talking about this infamous crop. The so-called full frame is taken as the reference, with a crop of 1. Yet larger formats such as 6×9 exist, so in reality full frame also has a crop: about 2.4, to be precise.
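Crop factors are conventionally computed as the ratio between the diagonal of the reference format and the diagonal of the smaller one. Here is a minimal Python sketch with the sizes quoted in this article. The "actual" 6×9 image area of about 84 × 56 mm is an added assumption, because real 6×9 frames are somewhat smaller than their nominal size. Nominal 60×90 mm gives 2.5 and 56×84 mm gives about 2.33, so the 2.4 above sits between the two. Note also that 13.5 × 18 mm gives about 1.92 rather than exactly 2; the commonly quoted ×2 is based on a slightly smaller imaging area (about 17.3 × 13 mm).

```python
import math

# (width, height) in millimetres; "6x9 actual (assumed)" is an approximation.
FORMATS_MM = {
    "Full Frame": (36.0, 24.0),
    "APS-C": (23.6, 15.7),
    "Canon APS-C": (22.2, 14.8),
    "Four Thirds": (18.0, 13.5),
    "6x9 nominal": (90.0, 60.0),
    "6x9 actual (assumed)": (84.0, 56.0),
}


def crop_factor(size_mm, reference_mm=FORMATS_MM["Full Frame"]):
    """Crop factor = diagonal of the reference format / diagonal of this format."""
    return math.hypot(*reference_mm) / math.hypot(*size_mm)


if __name__ == "__main__":
    for name in ("APS-C", "Canon APS-C", "Four Thirds"):
        print(f"{name}: crop {crop_factor(FORMATS_MM[name]):.2f}")
    # Full frame itself, measured against the larger 6x9 format.
    for name in ("6x9 nominal", "6x9 actual (assumed)"):
        cf = crop_factor(FORMATS_MM["Full Frame"], FORMATS_MM[name])
        print(f"Full Frame vs {name}: crop {cf:.2f}")
```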

Why is the crop feared?

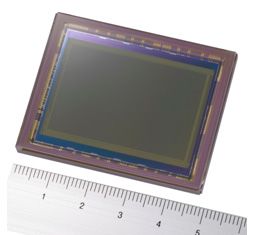

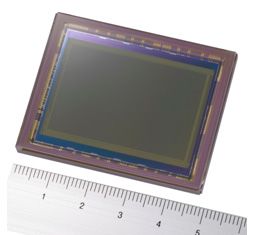

Measured sensor sizes

Because most people fear the "multiplication" of the focal length and the resulting loss of angle of view. So they push toward buying wider wide-angle lenses, thinking that recovering the angle puts everything back in place, when all they have done is pay for a more extreme wide-angle.

But this loss is not really significant unless the difference between the sensors is very large. I wrote an article with direct comparisons between a 4/3 sensor and a Super 35 sensor, with comparison photographs.

What exactly is the crop? And what is it NOT…

The crop cuts out part of the image ON the focal plane. When you talk about crop, think of taking the original image and keeping only its central part. It partially affects brightness, because the projection cone of a full-frame lens is not captured completely and the rays are not concentrated onto the smaller area. So if the light collected over the full frame is X, the cropped sensor receives only X reduced in proportion to the area it keeps.
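Under the simplifying assumption that the full-frame image circle lights the 24×36 area evenly (no vignetting), the total light reaching a centred crop scales with the share of the area it keeps. This minimal Python sketch makes that explicit; the function names are hypothetical. In this model the light per square millimetre is identical for every sensor, and only the total shrinks.

```python
def kept_area_fraction(sensor_mm, reference_mm=(36.0, 24.0)):
    """Share of the reference frame's area covered by a centred crop."""
    (w, h), (rw, rh) = sensor_mm, reference_mm
    if w > rw or h > rh:
        raise ValueError("the crop must fit inside the reference frame")
    return (w * h) / (rw * rh)


def light_on_sensor(total_light, sensor_mm, reference_mm=(36.0, 24.0)):
    """Total light on a cropped sensor, if `total_light` (the X of the text)
    covers the reference frame evenly. Assumes no vignetting."""
    return total_light * kept_area_fraction(sensor_mm, reference_mm)


if __name__ == "__main__":
    for name, size in {"APS-C": (23.6, 15.7), "Four Thirds": (18.0, 13.5)}.items():
        print(f"{name}: {light_on_sensor(1.0, size):.2f} X")
```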

What is the most common thought error?

You often read: "equivalent to a full-frame focal length of xxx".

This is an error.

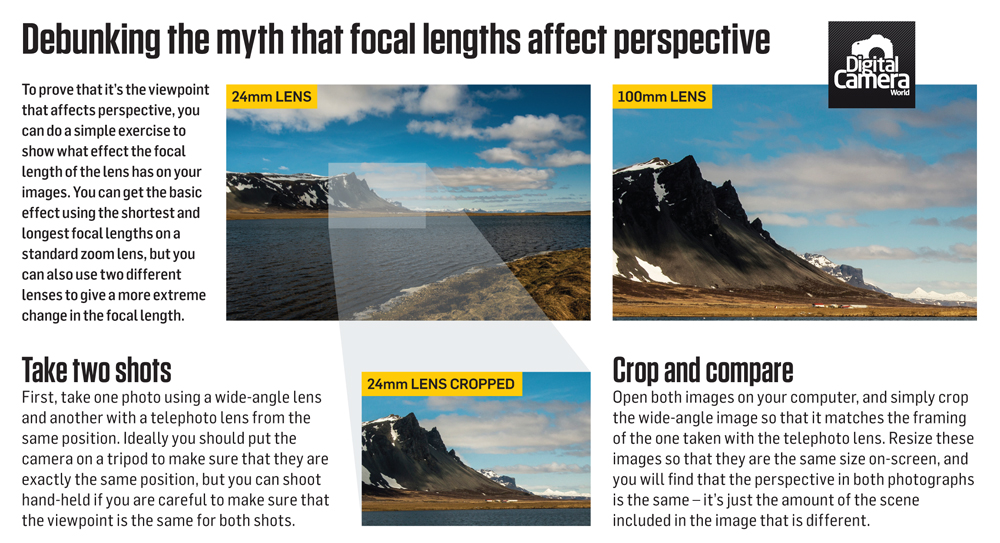

The crop can't change the FOCAL LENGTH OF A LENS!!! It does not change the curvature of the lens elements or the distance between them. It changes only the part of the focal plane that is CAPTURED, and therefore the angle of view.

You often read that a 12-35mm lens on Micro Four Thirds (crop ×2) is the equivalent of a 24-70mm on full frame… but here we should not speak of focal length, only of angle of view.
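The only thing that really matches in that "equivalence" is the angle of view. This is shown by the minimal Python sketch below, which takes the crop of 2 from the text at face value. It sets the Micro Four Thirds diagonal to exactly half the full-frame one. The function names are illustrative.

```python
import math

FULL_FRAME_DIAG = math.hypot(36.0, 24.0)
M43_DIAG = FULL_FRAME_DIAG / 2.0  # crop x2, as stated in the text


def angle_of_view(focal_mm, diag_mm):
    """Diagonal angle of view in degrees (rectilinear lens at infinity)."""
    return math.degrees(2 * math.atan(diag_mm / (2 * focal_mm)))


def same_angle_focal(focal_mm, crop):
    """Full-frame focal length that gives the same diagonal angle of view.
    This matches the angle only: the lens on the small sensor is still,
    physically, a `focal_mm` lens."""
    return focal_mm * crop


if __name__ == "__main__":
    for f in (12.0, 35.0):
        ff = same_angle_focal(f, 2.0)
        print(f"{f:.0f}mm on m4/3: {angle_of_view(f, M43_DIAG):.1f} deg | "
              f"{ff:.0f}mm on full frame: {angle_of_view(ff, FULL_FRAME_DIAG):.1f} deg")
```

The two angles come out identical, while the `focal_mm` of the lens mounted on the small sensor is never modified.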

Anyone who thinks that an extreme wide-angle like the 12mm and a moderate 35mm can have the perspective rendering of a moderate 24mm wide-angle and a short 70mm telephoto is so ignorant that they don't even deserve the right to write. And yet that is exactly what a Panasonic advertisement said a few months ago about their excellent 12-35mm f/2.8 lens.

What is the biggest mistake caused by the crop?

Many people, misled by the conversion, think that multiplying by the crop factor changes the focal length rather than just the angle of view. So they choose lenses and focal lengths wrongly.

Photography has always had optical rules, and post-production corrections will not change them.

When we talk about a "normal" lens we mean the 50mm focal length. It is defined as normal because its perspective corresponds to that of the human eye.

When you shoot with a 50mm, look through the viewfinder with one eye while the other eye looks directly at the scene: both eyes see the same kind of image.

There is a very simple rule in photography:

Do you want to see things exactly as they are in reality? Use a 50mm.

Do you want to compress perspective? Move up to telephotos.

Do you want to expand perspective? Move down to wide-angles.

The crop ALTERS ONLY the angle of view. It cannot change the shape of the lens elements, nor the distance between them.

Some manufacturers, such as Panasonic, apply in-camera image correction. The aim is to give the illusion that using more extreme wide-angles, lenses not suited to the sensor, creates no perspective-distortion problems. Unfortunately, the algorithm only works on barrel distortion. It compensates for the barrel distortion of the ultra-wide-angle and reduces the stretching at the edges. But it cannot alter the perspective, the distance between planes and so on, so the image is still perceived differently.
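To see why a software correction can straighten lines but cannot move planes, consider a generic one-coefficient radial model, a simplified Brown–Conrady model. This is an assumption chosen for illustration, not Panasonic's actual algorithm. The correction is a function of a point's position in the image alone. It never receives the subject's distance, so a nearby branch and a distant tree that land on the same point of the frame get exactly the same correction.

```python
import math


def distort(x, y, k1):
    """Apply a one-coefficient radial model to an ideal image point.

    (x, y) are normalised image coordinates centred on the optical axis;
    k1 < 0 pulls points toward the centre, i.e. barrel distortion.
    """
    scale = 1.0 + k1 * (x * x + y * y)
    return x * scale, y * scale


def undistort(xd, yd, k1, iterations=20):
    """Invert `distort` by fixed-point iteration (adequate for small |k1|)."""
    x, y = xd, yd
    for _ in range(iterations):
        scale = 1.0 + k1 * (x * x + y * y)
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    k1 = -0.1  # hypothetical barrel coefficient
    ideal = (0.5, 0.3)
    seen = distort(*ideal, k1)
    fixed = undistort(*seen, k1)
    # Only image coordinates go in: depth plays no part in the correction.
    print(f"radius ideal {math.hypot(*ideal):.4f}, distorted {math.hypot(*seen):.4f}, "
          f"corrected {math.hypot(*fixed):.4f}")
```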

So whether you are shooting stills or moving images, always choose a lens for the perspective you want. Only in extreme cases should you choose a shorter focal length just to capture a wider image.

When the wrong lens is used, the results are obvious, especially on nearby subjects and in portraits. Portraits are usually shot with an 85mm, the portrait lens par excellence. It slightly compresses perspective and makes the face more flattering, and it also blurs the background to reduce distractions.

If you use a wide-angle, the face will be distorted and rounded, the cheekbones inflated and the nose enlarged, so the portrait looks more like a caricature than a natural likeness. In the photo on the left, just look at how the eyes shift relative to the side of the face.
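Part of why a wide-angle deforms a face is that, to keep the same framing, you must shoot from much closer. Features nearer the lens are then rendered proportionally larger. This minimal Python sketch uses a pinhole approximation to estimate how much larger the nose is drawn than the ears. The 500 mm framing (head and shoulders on the 24 mm side of full frame) and the 100 mm nose-to-ear depth are hypothetical values.

```python
def subject_distance_mm(focal_mm, framed_height_mm, sensor_height_mm=24.0):
    """Pinhole approximation: camera-to-subject distance at which a region
    framed_height_mm tall fills the sensor height."""
    return focal_mm * framed_height_mm / sensor_height_mm


def nose_to_ear_scale(focal_mm, framed_height_mm=500.0, nose_to_ear_mm=100.0,
                      sensor_height_mm=24.0):
    """Rendered size of the nose divided by that of the ears (equal real size),
    keeping the same framing. Magnification is focal/distance in this model."""
    d_nose = subject_distance_mm(focal_mm, framed_height_mm, sensor_height_mm)
    d_ears = d_nose + nose_to_ear_mm
    return d_ears / d_nose


if __name__ == "__main__":
    for focal in (24.0, 35.0, 50.0, 85.0, 135.0):
        d = subject_distance_mm(focal, 500.0)
        print(f"{focal:.0f}mm: shoot from {d / 1000:.2f} m, nose rendered "
              f"{nose_to_ear_scale(focal) - 1:.0%} larger than the ears")
```

With these assumed numbers the 24mm draws the nose about 20% larger than the ears. The 85mm stays around 6%.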


So ultimately?

When you can choose a focal length, choose it for the perspective it gives, not for the angle of view. Pick among the classic 35, 50, 85 and 135mm for different cinematic situations.

Moving to wider wide-angle lenses should be a deliberate choice to emphasize a certain sense of space and perspective, not a way to get a wider angle of view.

It is no coincidence that those who shoot with prime (fixed focal length) lenses move around to find the right framing. In cinema, as is well known, sets often have movable "wild" walls. One is removed so the camera can back away and frame the scene through the gap it leaves.

And what about depth of field with the crop?

People also fear that with the crop they will lose depth-of-field control, remembering half-frame in photography or Super 8 in amateur film. But these are again errors born of superficial evaluation. It all stems from a common habit with small sensors: to manage framing, people use wide-angle lenses chosen for their angle of view rather than their focal length. This increases depth of field, so it is easier to have everything in focus and harder to blur the background, BUT…

NOTE: ALL this happens ONLY when we reason in terms of angle of view rather than focal length.

In fact, those who make this kind of demonstration show similar photographs taken with the same lens on different sensors. Because the same framing is physically impossible with a strong crop, the self-styled demonstrator moves back to cover the same angle of view. This violates the rules of a fair comparison. As all professional photographers know, one of the factors controlling depth of field is the distance to the subject in focus. Suppose I have a very strong crop on a lens like a Nokton 0.95, and I move back 5 meters from the subject to get the same angle of view. I can easily end up near the hyperfocal distance. So it is not the sensor that blurs less, but the traditional optical principles on which photography is based. Even a 6×6 camera shooting from that distance would have produced less blur.
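The effect of stepping back can be checked with the standard thin-lens depth-of-field formulas. The hyperfocal distance is H = f²/(N·c) + f. The near limit is s(H − f)/(H + s − 2f), and the far limit is s(H − f)/(H − s), or infinity when s ≥ H. In this minimal Python sketch the 25mm f/0.95 lens and the 0.015 mm circle of confusion are assumed values for a Nokton-like lens on Four Thirds. Only the subject distance changes between the two cases.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm):
    """Hyperfocal distance in mm (thin-lens approximation)."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, coc_mm, subject_mm):
    """Return (near limit, far limit, total depth) in millimetres."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = subject_mm * (h - focal_mm) / (h + subject_mm - 2 * focal_mm)
    if subject_mm >= h:
        return near, math.inf, math.inf
    far = subject_mm * (h - focal_mm) / (h - subject_mm)
    return near, far, far - near


if __name__ == "__main__":
    # Hypothetical setup: 25mm f/0.95 on Four Thirds, CoC 0.015 mm.
    for distance_m in (1.5, 5.0):
        near, far, total = depth_of_field_mm(25.0, 0.95, 0.015, distance_m * 1000)
        print(f"subject at {distance_m} m: sharp from {near / 1000:.2f} m "
              f"to {far / 1000:.2f} m ({total:.0f} mm)")
```

With the same lens, aperture and sensor, moving from 1.5 m to 5 m makes the zone of sharpness more than ten times deeper.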

To back this up, here are a few photographs taken with Micro Four Thirds (a sensor with a crop of 2). Despite that, they render remarkable background blur without any problem, because it is the lens that blurs, not the sensor…

Sample photographs taken on Micro Four Thirds:

- Samyang 35mm f/1.4

- Lumix 20mm f/1.7

- Samyang 35mm f/1.4

- Minolta Rokkor 50mm f/1.4

- Flowers, Lumix 20mm f/1.7

And who says otherwise?

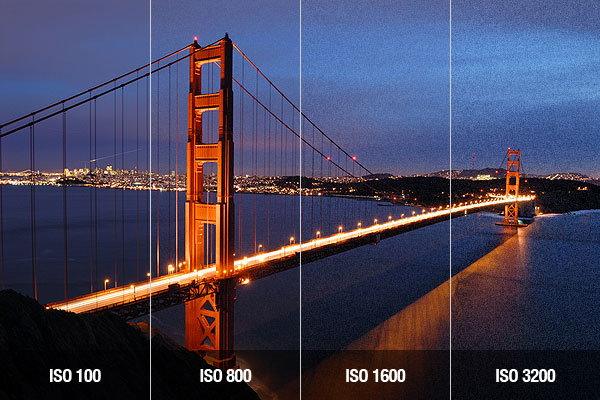

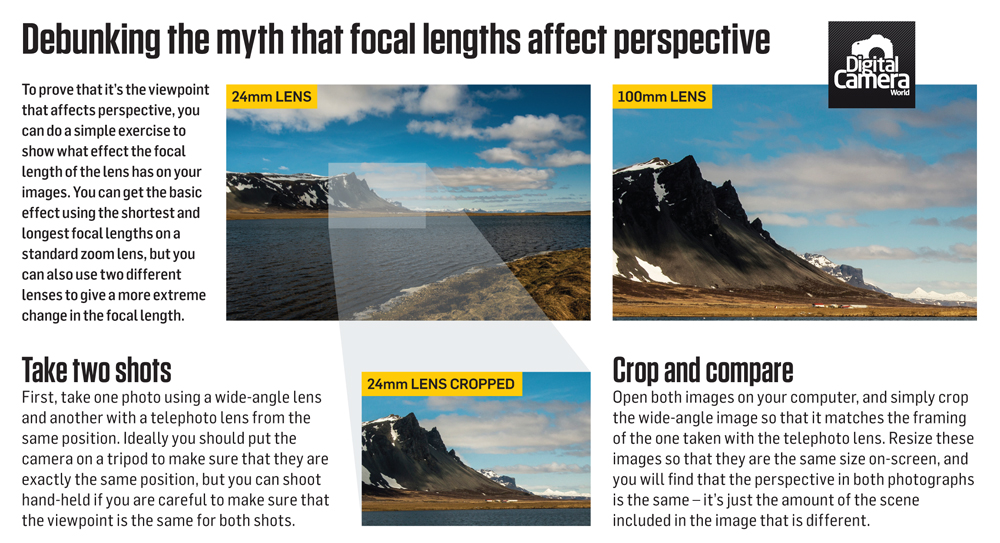

Then articles like the one you can see above come out, trying to prove the opposite and showing an even greater lack of common sense… They take a landscape shot with a wide-angle and compare a distant element with the same shot taken with a telephoto. From this they think they have shown that perspective distortion depends not on the lens but on the shooting distance… while the result is the sum of the two.

Moreover, by taking the central part of the image, where distortion is least noticeable, the comparison becomes even more useless and misleading. Nor do they explain why elements appear closer to each other as the focal length increases…

[ironic mode on] maybe because, when you move away, they huddle together to stay closer to you… [ironic mode off]

Take a picture with this lens, and I challenge you to take any part of the image and replicate it with a telephoto. However small a portion you take, the curvature of the elements that gather the light introduces a distortion so strong that no compensation factor can match it.

Comparison of nearby elements

Perspective distortion is related to the angle covered by the lens, and therefore to its very shape: the greater the curvature, the more the perspective is stretched. So when you shoot a very distant element with a wide-angle, it naturally covers such a narrow portion of the lens's angle that the perspective distortion is reduced to little or nothing. But these demonstrations are never done with elements closer to the camera body, because they cannot be, and that is exactly where the difference is strongest.
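How narrow that portion is can be estimated with simple trigonometry. An object of width W at distance D subtends an angle of 2·atan(W / 2D). This minimal Python sketch compares that with the horizontal angle of view of a hypothetical 14mm lens on full frame, for a 2 m wide element at 2 m and at 100 m. The lens and the distances are illustrative choices.

```python
import math


def angular_size_deg(width_m, distance_m):
    """Angle in degrees subtended by an object of the given width."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


def horizontal_aov_deg(focal_mm, sensor_width_mm=36.0):
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


if __name__ == "__main__":
    lens_angle = horizontal_aov_deg(14.0)  # hypothetical 14mm on full frame
    for distance in (2.0, 100.0):
        size = angular_size_deg(2.0, distance)
        print(f"2 m wide element at {distance:.0f} m: {size:.1f} deg, "
              f"{size / lens_angle:.1%} of the lens's {lens_angle:.1f} deg")
```

At 100 m the element occupies about 1% of the horizontal field, right where the wide-angle's stretching is weakest. At 2 m it spans about half of it.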

I also challenge these self-styled experts to replicate with a wide-angle, simply by shifting the point of view, the rearrangement of elements across planes, such as the branches behind the girl in the second photograph.


Too often these claims rely on an exception to the focal-length rule, such as shooting very distant elements, where the differences between short and long focal lengths shrink. Just take elements a few meters away… and you immediately notice that it is impossible to achieve the same result.

As you can see in the image on the left, when an APS-C lens is made, the size of the rear element that focuses the light rays onto the sensor is reduced.
