For years I have been tired of the nonsense about sensor size. The claim is that you absolutely need at least a full-frame sensor, otherwise the images are ugly, lack three-dimensionality, don't gather enough light, and so on. Yet cinema has always been shot on Super 35, which is roughly APS-C in size.

You know the funny thing? Over the years I have debunked all these myths with explanations and technical proof of what really makes the difference and what actually changes three-dimensionality, space and light… and of the difference between those who actually work and those who just compare measurements…

The sad thing is that in 2025 this myth is still alive, yet nobody seems to have figured out what a bigger sensor really changes, or the only real reason it improves image quality. And it is none of the things I mentioned above.

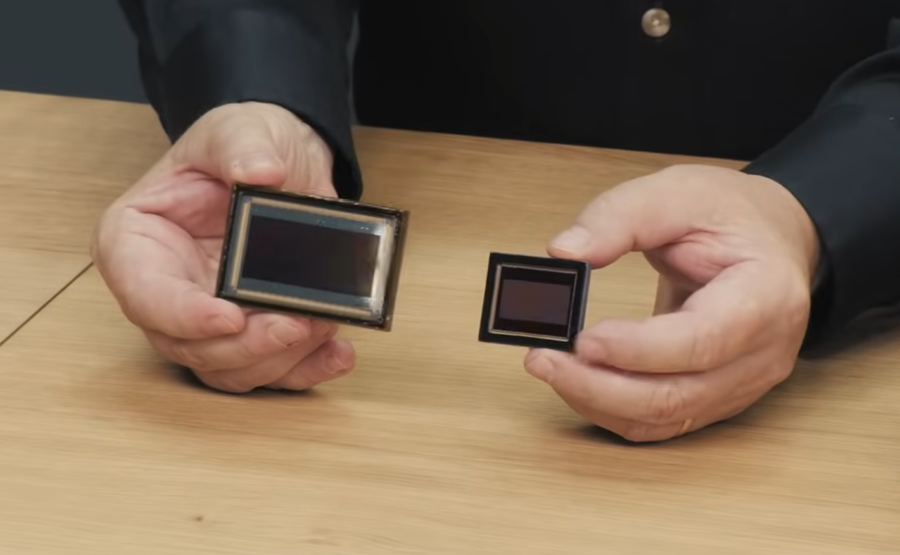

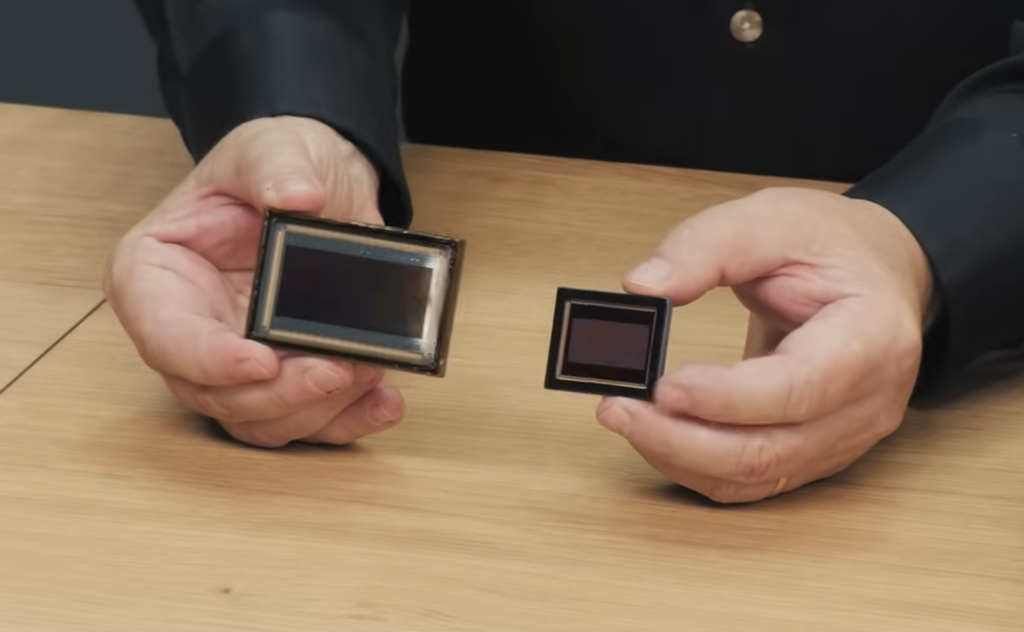

The topic came up again today because Blackmagic launched both the 12K Cine and the 17K with a 65mm sensor, and everyone is repeating the usual mantra. But there is a reason those sensors are bigger, and in this case Blackmagic simply went down a familiar path.

In fact, several manufacturers were aware of this, which is why the Arri Alexa had a 3.5K sensor in open gate and dropped to 2.7K when shooting 1.85.

Sony did the same with its video-oriented cameras from the A7S II onwards. Alongside the R (resolution) series, it kept a line with lower resolution relative to sensor size. This was not done to gather more light, as many have mistakenly written, but because of a principle that old photographers knew well and that many have forgotten today: diffraction.

What diffraction is and how it is calculated

Diffraction is an optical phenomenon that sets a physical limit no equipment can get around. When light passes through an opening such as the lens diaphragm, it spreads out, so a point of light is not imaged as a point but as a small blurred disc (the Airy disc). The more the diaphragm is closed, the larger that disc becomes, and once it spreads over more than a couple of pixels, the image becomes less sharp.

In practice: depending on the size and resolution of the sensor (that is, on the size of its pixels) and on how far the diaphragm is closed, stopping down beyond a certain point makes the image lose sharpness.
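To make the idea concrete, here is a minimal sketch of how the Airy disc grows as you stop down. It assumes green light at 0.55 μm and the usual first-dark-ring diameter of 2.44 × λ × N, where N is the f-stop; the 2.2 μm pixel pitch is just an example value, and the function names are illustrative.

```python
# Sketch: how the Airy disc (diffraction blur spot) grows as you stop down.
# Assumes green light (0.55 um) and the first-dark-ring diameter d = 2.44 * lambda * N.

GREEN_UM = 0.55  # wavelength of green light, in micrometres


def airy_disc_diameter_um(f_number: float, wavelength_um: float = GREEN_UM) -> float:
    """Diameter of the Airy disc on the sensor, out to the first dark ring, in micrometres."""
    return 2.44 * wavelength_um * f_number


def pixels_covered(f_number: float, pixel_pitch_um: float) -> float:
    """How many pixel pitches the Airy disc spans across its diameter."""
    return airy_disc_diameter_um(f_number) / pixel_pitch_um


if __name__ == "__main__":
    pitch = 2.2  # example pixel pitch in micrometres
    for n in (2.8, 4, 5.6, 8, 11, 16):
        print(f"f/{n}: Airy disc {airy_disc_diameter_um(n):.1f} um, "
              f"about {pixels_covered(n, pitch):.1f} pixels at {pitch} um pitch")
```

The disc size depends only on the wavelength and the f-stop; the pixel pitch decides whether that blur is visible.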

So if we raise the capture resolution on a sensor of the same size, the pixels get smaller, and we have to keep the aperture more open by a certain amount, otherwise the image will be less sharp.
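A small sketch shows the effect of packing more pixels into the same width. It assumes a hypothetical 27.03 mm wide sensor (roughly Super 35, chosen so that 12288 pixels give about 2.2 μm), ignores gaps between photosites, and uses the two-pixel Airy disc rule derived in the next section. The resolutions are illustrative, not a specific camera lineup.

```python
# Sketch: same sensor width, different horizontal resolutions.
# Assumption: a hypothetical 27.03 mm wide sensor; resolutions are illustrative.

GREEN_UM = 0.55  # wavelength of green light, micrometres


def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in micrometres, ignoring gaps between photosites."""
    return sensor_width_mm * 1000.0 / horizontal_pixels


def diffraction_limit_f_stop(pitch_um: float, pixels: float = 2.0,
                             wavelength_um: float = GREEN_UM) -> float:
    """f-stop at which the Airy disc (2.44 * lambda * N) spans `pixels` pixel pitches."""
    return pixels * pitch_um / (2.44 * wavelength_um)


if __name__ == "__main__":
    width_mm = 27.03
    for px in (4096, 6144, 8192, 12288):
        p = pixel_pitch_um(width_mm, px)
        print(f"{px} px wide: pitch {p:.2f} um, avoid stopping down past "
              f"about f/{diffraction_limit_f_stop(p):.1f}")
```

Same glass, same sensor size: tripling the horizontal resolution pulls the usable limit about three stops' worth of f-numbers toward the wide end.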

So if we want to shoot in deep focus, we have to take this factor into account and know what the limit of our camera/lens is, otherwise we may find ourselves in an absurd situation where closing the aperture will reduce the final sharpness of the image.

I fell for this myself when taking this image, where I stopped down too far.

The Rayleigh criterion

A mathematical way to put a number on the smallest resolvable detail when an imaging system is diffraction-limited:

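θ = 1.22 λ / D

where θ is the angle, in radians, between the centre of the Airy disc (the blur spot into which a point of light is spread) and its first dark ring, λ is the wavelength of the light (0.55 μm for green) and D is the diameter of the aperture.

On the sensor that angle becomes a distance: multiplying by the focal length f gives an Airy disc radius of 1.22 λ f / D = 1.22 λ N, where N = f / D is the f-stop (equivalently, N ≈ 1 / (2 NA), with NA the numerical aperture). The diameter of the Airy disc is therefore about 2.44 λ N, and it grows as we stop down.

What matters is how that disc compares with the pixel pitch p, the distance between the centres of two adjacent pixels. The smallest detail a sensor can resolve spans at least two pixels, so sharpness starts to suffer once the Airy disc covers about 2 to 3 pixels.

Setting the Airy disc diameter equal to two pixel pitches, 2.44 λ N = 2p, gives the largest f-stop we can use before diffraction becomes visible:

f/stop = 2p / (2.44 λ)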

For the UMP12K (2.2 μm pixel pitch): (2 × 2.2) / (2.44 × 0.55) = f/3.3

For the Cine12K (2.9 μm): (2 × 2.9) / (2.44 × 0.55) = f/4.3

For the Pocket 6K (3.8 μm): (2 × 3.8) / (2.44 × 0.55) = f/5.7

For the UMP4.6G2 (5.5 μm): (2 × 5.5) / (2.44 × 0.55) = f/8.2

For the BMCC6K (6.0 μm): (2 × 6.0) / (2.44 × 0.55) = f/8.9
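The list above is easy to reproduce, and to extend to other cameras, with a few lines of code. This is a sketch using only the pixel pitches quoted in this article and green light at 0.55 μm; the dictionary and function names are illustrative. Passing `pixels=3` gives the more tolerant three-pixel limits.

```python
# Sketch: reproduce the two-pixel diffraction limits listed above.
# Pixel pitches are the values quoted in this article; wavelength is green light.
from __future__ import annotations

GREEN_UM = 0.55  # micrometres

PIXEL_PITCH_UM = {
    "UMP12K": 2.2,
    "Cine12K": 2.9,
    "Pocket 6K": 3.8,
    "UMP4.6G2": 5.5,
    "BMCC6K": 6.0,
}


def max_f_stop(pitch_um: float, pixels: float = 2.0, wavelength_um: float = GREEN_UM) -> float:
    """Largest f-stop before the Airy disc (2.44 * lambda * N) exceeds `pixels` pixel pitches."""
    return pixels * pitch_um / (2.44 * wavelength_um)


def limits(pixels: float = 2.0) -> dict[str, float]:
    """Diffraction limit per camera, rounded to one decimal like the list above."""
    return {name: round(max_f_stop(p, pixels), 1) for name, p in PIXEL_PITCH_UM.items()}


if __name__ == "__main__":
    for name, n in limits().items():
        print(f"{name}: f/{n}")
```
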

If we accept an Airy disc that covers 3 pixels instead of 2, we can stop down further:

For the UMP12K: (3 × 2.2) / (2.44 × 0.55) = f/4.9

For the Cine12K: (3 × 2.9) / (2.44 × 0.55) = f/6.5

For the Pocket 6K: (3 × 3.8) / (2.44 × 0.55) = f/8.5

For the UMP4.6G2: (3 × 5.5) / (2.44 × 0.55) = f/12.3

For the BMCC6K: (3 × 6.0) / (2.44 × 0.55) = f/13.4
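On set the question usually runs the other way: "I want to shoot at f/8, is that a problem on this camera?" Here is a sketch that checks a planned f-stop against the 2-pixel and 3-pixel thresholds used above. It assumes green light and the pixel pitches quoted in this article; the verdict labels are made up for illustration.

```python
# Sketch: check a planned f-stop against each camera, using the
# 2-pixel and 3-pixel thresholds above. Verdict labels are illustrative.

GREEN_UM = 0.55  # wavelength of green light, micrometres

# Pixel pitches (micrometres) as quoted in the lists above.
PIXEL_PITCH_UM = {
    "UMP12K": 2.2,
    "Cine12K": 2.9,
    "Pocket 6K": 3.8,
    "UMP4.6G2": 5.5,
    "BMCC6K": 6.0,
}


def airy_pixels(f_number: float, pitch_um: float, wavelength_um: float = GREEN_UM) -> float:
    """Airy disc diameter (2.44 * lambda * N) expressed in pixel pitches."""
    return 2.44 * wavelength_um * f_number / pitch_um


def verdict(f_number: float, pitch_um: float) -> str:
    """Classify a setting: within 2 pixels, within 3 pixels, or beyond both."""
    covered = airy_pixels(f_number, pitch_um)
    if covered <= 2.0:
        return "within 2 px"
    if covered <= 3.0:
        return "within 3 px"
    return "diffraction-limited"


if __name__ == "__main__":
    for n in (5.6, 8.0):
        for name, pitch in PIXEL_PITCH_UM.items():
            print(f"f/{n} on {name}: {airy_pixels(n, pitch):.1f} px -> {verdict(n, pitch)}")
```

The same f/5.6 that is harmless on the Pocket 6K already blurs detail on the UMP12K.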

As can be seen, diffraction places a limit on the smallest physical details we can record. The denser the sensor (the smaller the pixel pitch), the wider the aperture at which it starts to out-resolve what the lens can deliver.

From these simple calculations we can draw two fundamental conclusions: knowing our camera lets us get the best out of it, and knowing the basics of photography lets us avoid a series of practical technical problems that should not be taken for granted.

The same calculation also explains why, with very dense sensors, it is better to control light with ND filters of various kinds rather than stopping the lens down too far. Otherwise the high resolution is pointless, because we throw it away optically.
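How much ND do you need to stay at the diffraction limit? A sketch, assuming shutter speed and ISO stay fixed: the light reaching the sensor scales with 1 / N², so opening from f/N1 to f/N2 adds 2 × log2(N1 / N2) stops, which the ND has to take away. The scene values and function names are hypothetical.

```python
import math


def nd_stops_needed(metered_f_number: float, limit_f_number: float) -> float:
    """Stops of ND needed to open up from the metered f-stop to the limit f-stop.

    Light through the lens scales with 1 / N**2, so going from N1 to N2 changes
    exposure by 2 * log2(N1 / N2) stops. Returns 0 if no opening up is needed.
    """
    if metered_f_number <= limit_f_number:
        return 0.0
    return 2.0 * math.log2(metered_f_number / limit_f_number)


def nd_optical_density(stops: float) -> float:
    """Optical density of an ND filter cutting `stops` stops (ND 0.3 is about 1 stop)."""
    return stops * math.log10(2.0)


if __name__ == "__main__":
    # Hypothetical scene: the meter reads f/11, but we want to stay at f/4.9
    # (the 3-pixel limit computed above for the UMP12K).
    stops = nd_stops_needed(11.0, 4.9)
    print(f"ND needed: {stops:.2f} stops, optical density {nd_optical_density(stops):.2f}")
```

Real ND filters come in fixed steps, so in practice you would pick the nearest strength and fine-tune with shutter or ISO.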

Now the question on many people's minds should be: what about all those phones with 100 MP and a sensor the size of a pinhead? 😀 Happy calculating.
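For anyone who wants to try, here is a starting point. The pixel pitches below are hypothetical small-sensor values, not taken from any specific phone; compare the result with the aperture printed on your own phone's spec sheet. The formula is the same two-pixel rule used above.

```python
# Sketch: two-pixel diffraction limit for hypothetical tiny pixel pitches.

GREEN_UM = 0.55  # wavelength of green light, micrometres


def max_f_stop(pitch_um: float, pixels: float = 2.0, wavelength_um: float = GREEN_UM) -> float:
    """Largest f-stop before the Airy disc (2.44 * lambda * N) exceeds `pixels` pixel pitches."""
    return pixels * pitch_um / (2.44 * wavelength_um)


if __name__ == "__main__":
    # Hypothetical small-sensor pixel pitches, in micrometres.
    for pitch in (0.6, 0.8, 1.0):
        print(f"{pitch} um pixels: limit around f/{max_f_stop(pitch):.1f}")
```
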